As part of our Innovation Hub, a group of engineers committed to exploring emerging tech hands-on, I recently ran a small but meaningful experiment. The idea? Creating a proof of concept (PoC) that merges computer vision with AI, all within a React Native app.

Why does this matter? Well, in the world of IoT, there’s often a frustrating gap: how do you get digital instructions to smoothly translate into real-world, physical actions? This experiment directly tackles that challenge.

Here’s how it went.

Our Computer Vision + AI Experiment in React Native

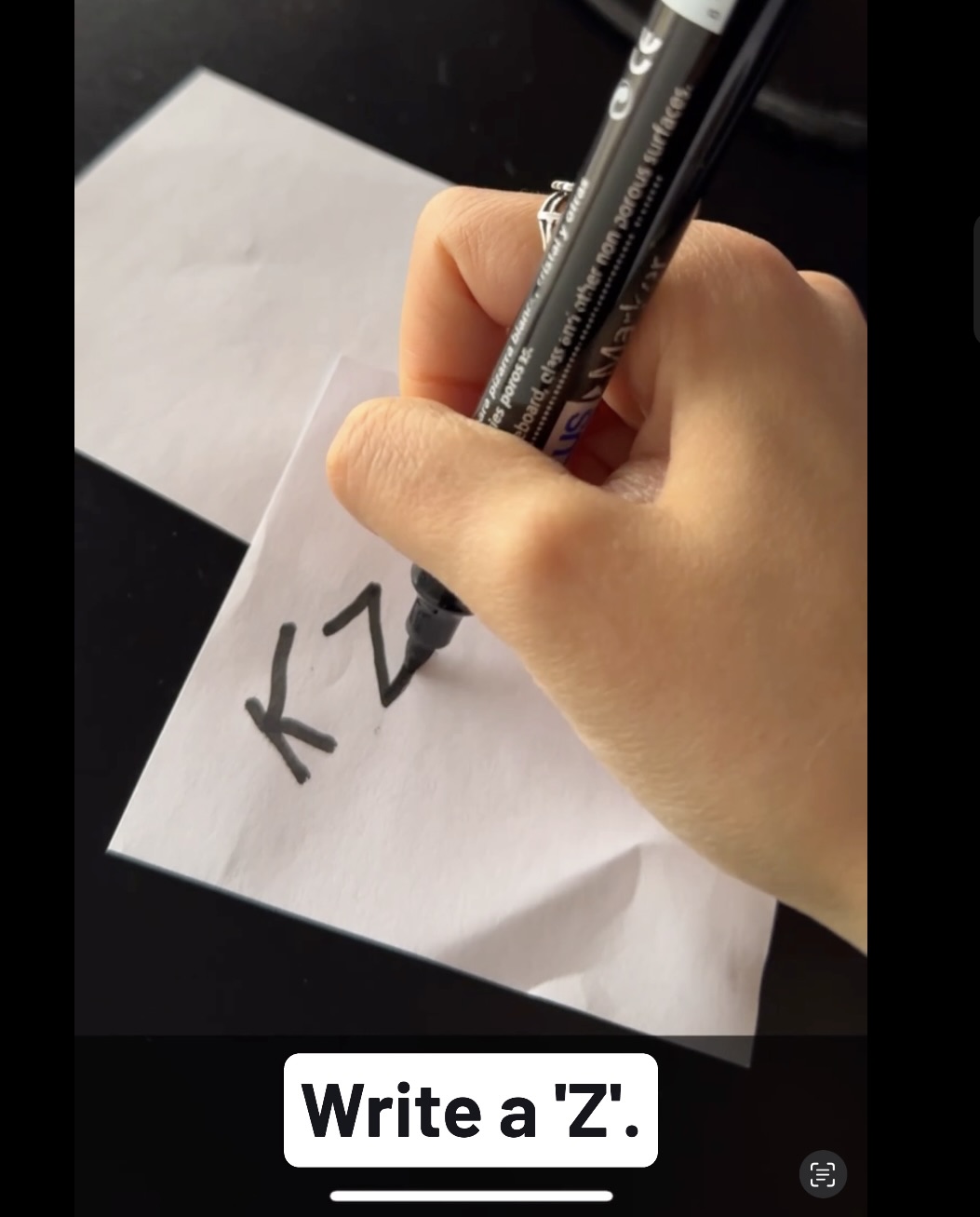

Imagine you need to draw your name on a piece of paper. Instead of just looking at an example, your phone’s camera acts as your guide, giving you live instructions straight from GPT-4o (a multimodal large language model that processes images + text).

Every three seconds, the app captures a frame from the camera, turns it into a Base64 image, and sends it off to the ChatGPT Vision API. Based on what it “sees”, it then streams back real-time, step-by-step guidance right onto your screen, like:

- “Grab the marker”

- “Take off the cap”

- “Draw the line”

The AI-powered visual assistant lets an app (on the user’s phone) guide someone step-by-step through a physical task (like drawing or interacting with a device) in real-time, using the camera. It transforms passive instructions into an active, interactive guide.

This kind of interaction is incredibly relevant in IoT contexts, where hardware needs to bridge the gap between digital instructions and real-world actions. It’s about making complex tasks intuitive and reducing errors in the field.

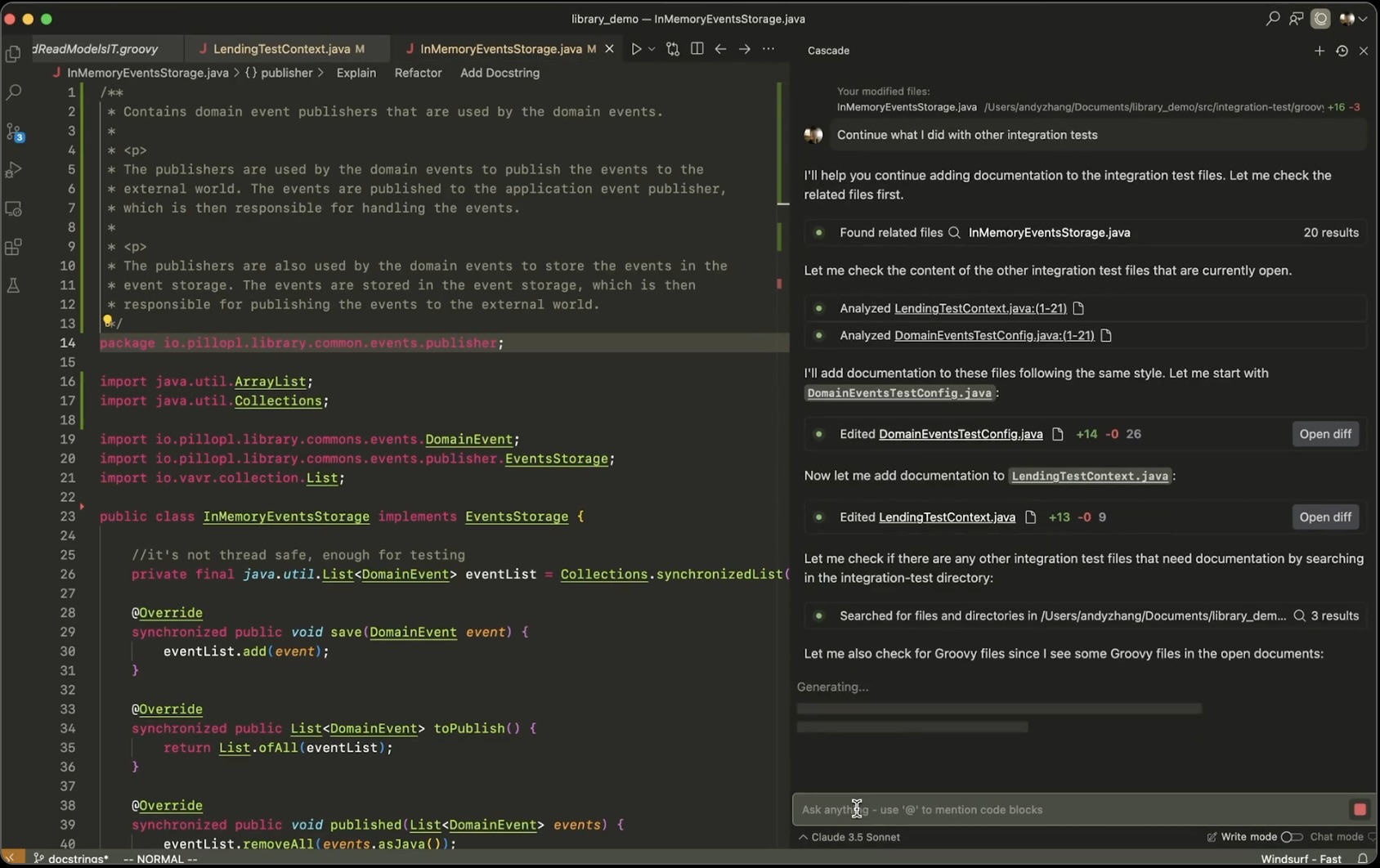

The React Native Setup: Our Computer Vision PoC

We gave ourselves a tight two-week window for this experiment, with some clear ground rules:

- Tech Stack: We relied on React Native, paired with react-native-camera-vision for the camera capabilities.

- Data Flow: Capture → Base64 → ChatGPT Vision API → Streamed response → On-screen text

- Scenarios Tested: We walked through a few basic situations to see how the AI would react:

- No marker → “Grab the marker”

- Marker with cap → “Take off the cap”

- Marker uncapped, paper visible → “Draw the line”

- No marker → “Grab the marker”

Insights from Our AI & Computer Vision Experiment

Like any experiment, we ran into unexpected outcomes:

- Streaming wasn’t a must-have: We initially thought real-time streaming would be crucial for responsiveness, but standard API requests proved to be plenty fast enough for a smooth user experience.

- One frame every 3 seconds was the sweet spot: This interval hit the perfect balance between feeling responsive to the user and managing our API token usage efficiently.

- Token costs were surprisingly manageable: Each request/response cycle consumed around 490 tokens, with most of that coming from the image input itself.

- We expanded on the fly: We pushed the boundaries a bit, adding steps like “look for a piece of paper” and even challenged the assistant to guide us through writing the full word rather than just a simple line.

In our daily work with IoT companies, we build software that lives and breathes in physical spaces (think of warehouses, supermarkets, sensor installations). That’s where this kind of vision+AI combo can shine.

This particular PoC wasn’t just a fun side project; it helped us:

- Explore real-time visual assistance directly within mobile applications.

- Experiment with “low-friction” computer vision, using readily available tools like React Native and ChatGPT to get quick insights.

- Build crucial internal know-how that we can directly apply to solve real challenges for our clients.

What’s Next for AI-Powered IoT Solutions?

The next iteration is already brewing. We’re exploring ways to replicate this behavior locally, no external API calls, lower latency, better privacy. Tools like TensorFlow Lite or MediaPipe are on our radar.

This experiment was just the start. But it’s part of a broader strategy: build internal fluency in emerging tools that can create real value.